Automated Fixation Annotation of Eye-Tracking Videos

This is a novel approach that integrates two foundation models, YOLOv8 and Mask2Former, into a pipeline that automatically annotates fixation points without requiring additional training or fine-tuning.

Click on this link to download the zipped folder containing the data and code. Set up your Python environment using the environment.yml file. Here is a link explaining how to set up a conda environment using a yml file.

Once your environment is set up, navigate to the folder named “code” and open the annotate.ipynb file using Jupyter Notebook.

Running the Pipeline

The first cell imports the libraries (ensure that the utils.py file is located in the same directory as annotate.ipynb).

# Imports
import os
import matplotlib
import time

# import from own scripts
from utils import clean_directory, create_directory, idt, predict_annotation, create_video, check_idt, extract_frames, evaluate_segmentations

In the second cell, change base_path = '../data/' to the location of your data (for example, base_path = 'C:/Users/Username/Desktop/data'):

# Environment settings
verbose = True
super_verbose = False

# int or None
stop_frame = None # int frame number or None, after which the frame extraction stops to test annotation on short video-clips

# Reduce figure opening, to prevent RAM overload on certain hardware
matplotlib.use("Agg")

# data base path
base_path = '../data/'

Define all input and output file names, or leave them as is:

# input filenames: these must correspond to the existing video and gaze files and be placed in the base_path folder
gaze_file = 'gaze_positions.csv'
video_file = 'worldTrim.mp4'

# Include already created idt-file by setting it to: 'fixation_gaze_positions.csv' or None
idt_filename = 'fixation_gaze_positions.csv'

# filenames for files created during the script
fixation_csv_file = 'fixation_gaze_positions.csv'
output_csv_name = 'labeled_data_semSeg_yolo.csv'

# Create folder path locations
output_path = os.path.join(base_path, 'outputs/')
extracted_frames_path = os.path.join(base_path, 'extracted_frames/')
fig_path = os.path.join(output_path, 'saved_frames_semSeg_yolo')

# create necessary directories
create_directory(directory_name=output_path, verbose=verbose)
create_directory(directory_name=extracted_frames_path, verbose=verbose)
create_directory(directory_name=fig_path, verbose=verbose)

Two files are needed to run the code:

- Eye Data (A or B)
  - A: Raw Gaze Positions Export File (optional: if you already have fixations, you can omit this file and use the Fixation File instead): In the sample data folder, this file is named gaze_positions.csv. It should contain the following columns:
    - gaze_timestamp: A float representing the timestamp of the recorded gaze (the start of your recording).
    - world_index: The frame number from the scene camera to which this gaze point belongs.
    - norm_pos_x, norm_pos_y: The normalized x and y coordinates of the gaze with respect to the frame size.
  - B: Fixation File (if you already have fixations; otherwise use A): If you have already computed the fixations or have an exported fixation file from your eye-tracker, you can use it as fixation_gaze_positions.csv for the annotation. In this case, the file should contain the following columns (if you do not provide this file, it will be created as part of the pipeline using the IDT algorithm):
    - world_index: The frame index of the detected fixation.
    - x_mean: X coordinate of the fixation (normalized between 0 and 1 based on the frame dimensions).
    - y_mean: Y coordinate of the fixation (normalized between 0 and 1 based on the frame dimensions).
- Scene Video Recording (mandatory): In the sample data folder, this file is named worldTrim.mp4.
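Before running the notebook, it can help to confirm that your own files carry the columns listed above. The following is a minimal sketch using only the standard library; the helper name missing_columns is hypothetical and is not part of utils.py, and it assumes a comma-separated file whose first row is the header.

```python
import csv

# Column names taken from the file descriptions above.
GAZE_COLUMNS = {"gaze_timestamp", "world_index", "norm_pos_x", "norm_pos_y"}
FIXATION_COLUMNS = {"world_index", "x_mean", "y_mean"}


def missing_columns(csv_path, required):
    """Return the sorted list of required columns absent from the CSV header.

    Hypothetical helper (not part of utils.py); assumes the first row of a
    comma-separated file is the header.
    """
    with open(csv_path, newline="") as f:
        header = next(csv.reader(f), [])
    present = {name.strip() for name in header}
    return sorted(required - present)


# Example call (the path is an assumption; adapt it to your base_path):
# print(missing_columns("../data/gaze_positions.csv", GAZE_COLUMNS))
```

An empty list means every required column is present; any names returned are the ones you would need to add or rename before running the pipeline.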

The next cell checks if the fixation file exists. If it doesn’t, the cell will compute the fixations using the IDT algorithm with default threshold values: dis_threshold=0.02 and dur_threshold=100. This will generate a fixation file containing the following information: id, time, world_index, x_mean, y_mean, start_frame, end_frame, and dispersion:

...
# if no idt file has been provided, calculate it from a gaze file
if idt_filename is None:
    if verbose:
        print('Creating fixation file by IDT.')
    idt(data_path=os.path.join(base_path, gaze_file), dis_threshold=0.02, dur_threshold=100, file_basename=gaze_file,
        output_path=base_path, verbose=verbose)
...

From here on, the Jupyter Notebook does not require any further input from you. The process will annotate the fixations. Be patient, as this will take a while: depending on your system, processing can take several times the duration of the video.
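For readers unfamiliar with dispersion-threshold identification (I-DT), the idea behind the fixation step can be sketched as follows. This is a generic illustration, not the idt function from utils.py, whose internals are not shown here. It assumes timestamps in milliseconds (so that dur_threshold=100 means 100 ms), normalized coordinates, and dispersion defined as (max x - min x) + (max y - min y); the name idt_sketch and the output keys are hypothetical.

```python
def dispersion(window):
    """Dispersion of a window of (t, x, y) samples: x-range plus y-range."""
    xs = [p[1] for p in window]
    ys = [p[2] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def idt_sketch(samples, dis_threshold=0.02, dur_threshold=100):
    """Generic I-DT sketch (not the utils.idt implementation).

    samples: list of (timestamp_ms, x, y) tuples sorted by time, with x and y
    normalized to [0, 1]. Units and output keys are assumptions.
    """
    fixations = []
    start, n = 0, len(samples)
    while start < n:
        # Grow the initial window until it spans the minimum duration.
        end = start
        while end < n and samples[end][0] - samples[start][0] < dur_threshold:
            end += 1
        if end >= n:
            break  # remaining samples are too short to form a fixation
        if dispersion(samples[start:end + 1]) <= dis_threshold:
            # Extend the window while the points stay close together.
            while end + 1 < n and dispersion(samples[start:end + 2]) <= dis_threshold:
                end += 1
            window = samples[start:end + 1]
            fixations.append({
                "start_time": window[0][0],
                "end_time": window[-1][0],
                "x_mean": sum(p[1] for p in window) / len(window),
                "y_mean": sum(p[2] for p in window) / len(window),
            })
            start = end + 1  # continue after the detected fixation
        else:
            start += 1  # no fixation here; slide the window by one sample
    return fixations
```

A smaller dis_threshold splits gaze into more, tighter fixations, while a larger dur_threshold discards brief ones, so both values are worth revisiting if the defaults do not suit your recording.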

Next, the frames for each fixation point are extracted. The function extract_frames exports only those frames on which a fixation is detected:

...
if verbose:
    print('Extracting frames from video.')
extract_frames(base_path=base_path, csv_path=fixation_csv_file, video_path=video_file,
               extracted_frames_path=extracted_frames_path, stop_frame=stop_frame, verbose=verbose)
...

Then, the main function, predict_annotation, annotates the frames using late fusion of YOLO and Mask2Former:

...
extracted_data = predict_annotation(base_path=base_path, fixation_path=fixation_csv_file,
                                    frame_path=extracted_frames_path, savefig_path=fig_path,
                                    create_sequence_images=True, verbose=verbose)
...

This function creates a new folder with the annotated frames as .png files and a CSV file containing the following columns: frame_number, point_x, point_y, yolo_label, segmentation_label, bounding_box_max_iou, yolo_conf, max_iou_box_area_ratio, and mask_coverage.

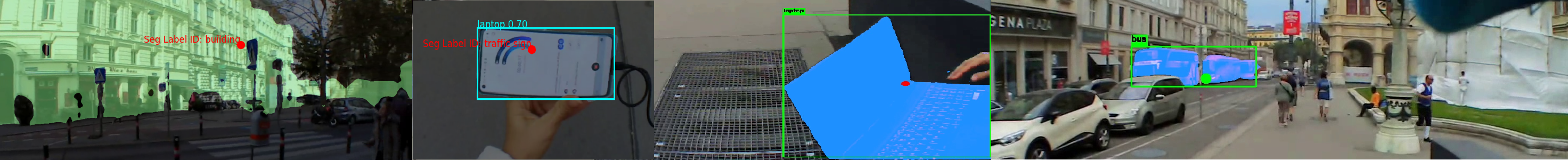

You can use the create_video function to stitch the annotated frames back together into a video.

A Simple Guideline for Selecting the Final Label

Once the output is ready, you can follow this general recommendation:

- If the yolo_label and the segmentation_label agree, then just select one.
- If only one of the yolo_label or the segmentation_label exists, choose the existing one.
- If the yolo_label and the segmentation_label disagree:
  - If there are specific objects of interest (e.g., tablet, cell phone, laptop), create a list of these items. If either model predicts an object from this list, retain that prediction.
  - If the predicted label is not in the list of items you are interested in, apply thresholding: for example, if mask_coverage is above 70% and yolo_conf is higher than 70%, choose YOLO’s prediction.

priority_objects = ["tablet", "cell phone", "laptop"]

def select_final_label(yolo_label, segmentation_label, yolo_conf, mask_coverage):
    # Check if both labels agree
    if yolo_label == segmentation_label:
        return yolo_label

    # Check if either label exists
    if yolo_label and not segmentation_label:
        return yolo_label
    if segmentation_label and not yolo_label:
        return segmentation_label

    # Check if the object is in the priority list
    if yolo_label in priority_objects:
        return yolo_label
    if segmentation_label in priority_objects:
        return segmentation_label

    # Apply thresholding if the label is not in the priority list
    if mask_coverage > 0.7 and yolo_conf > 0.7:
        return yolo_label

    # Default to segmentation label if no other conditions are met
    return segmentation_label
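When such a rule is applied to the output CSV, every cell is read as a string, so missing labels and numeric fields need to be normalized first. The sketch below shows one possible way to do this with the standard library. The helper names (label_rows, label_csv) are hypothetical, and it is an assumption that missing labels appear as empty cells or as the text "nan" or "None"; check your own CSV before relying on this. The selection rule is passed in as select_fn so the block stays self-contained.

```python
import csv


def _clean_label(value):
    """Map empty or placeholder cells to None (placeholder spellings are assumed)."""
    text = (value or "").strip()
    return None if text.lower() in {"", "nan", "none"} else text


def _to_float(value, default=0.0):
    """Parse a numeric cell, falling back to `default` when it is blank or invalid."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return default


def label_rows(rows, select_fn):
    """Yield (frame_number, final_label) for each row of the annotation CSV.

    rows: iterable of dicts keyed by the column names listed earlier.
    select_fn: callable taking (yolo_label, segmentation_label, yolo_conf,
    mask_coverage), for example a rule like the one above.
    """
    for row in rows:
        final = select_fn(
            _clean_label(row.get("yolo_label")),
            _clean_label(row.get("segmentation_label")),
            _to_float(row.get("yolo_conf")),
            _to_float(row.get("mask_coverage")),
        )
        yield row.get("frame_number"), final


def label_csv(csv_path, select_fn):
    """Read the annotation CSV and return a list of (frame_number, final_label)."""
    with open(csv_path, newline="") as f:
        return list(label_rows(csv.DictReader(f), select_fn))
```

Calling label_csv with the path to your labeled CSV and select_final_label as select_fn would return one final label per annotated frame. Treating blank confidence or coverage values as 0.0 is a conservative choice here, since it prevents the thresholding branch from firing on missing data.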